When a company claims it has built an "autonomous AI doctor," the natural clinical reaction is scepticism. But in 2026, scepticism isn't enough; you need literacy.

The right stance is neither "AI is magic" nor "AI is useless". It is methodology first.

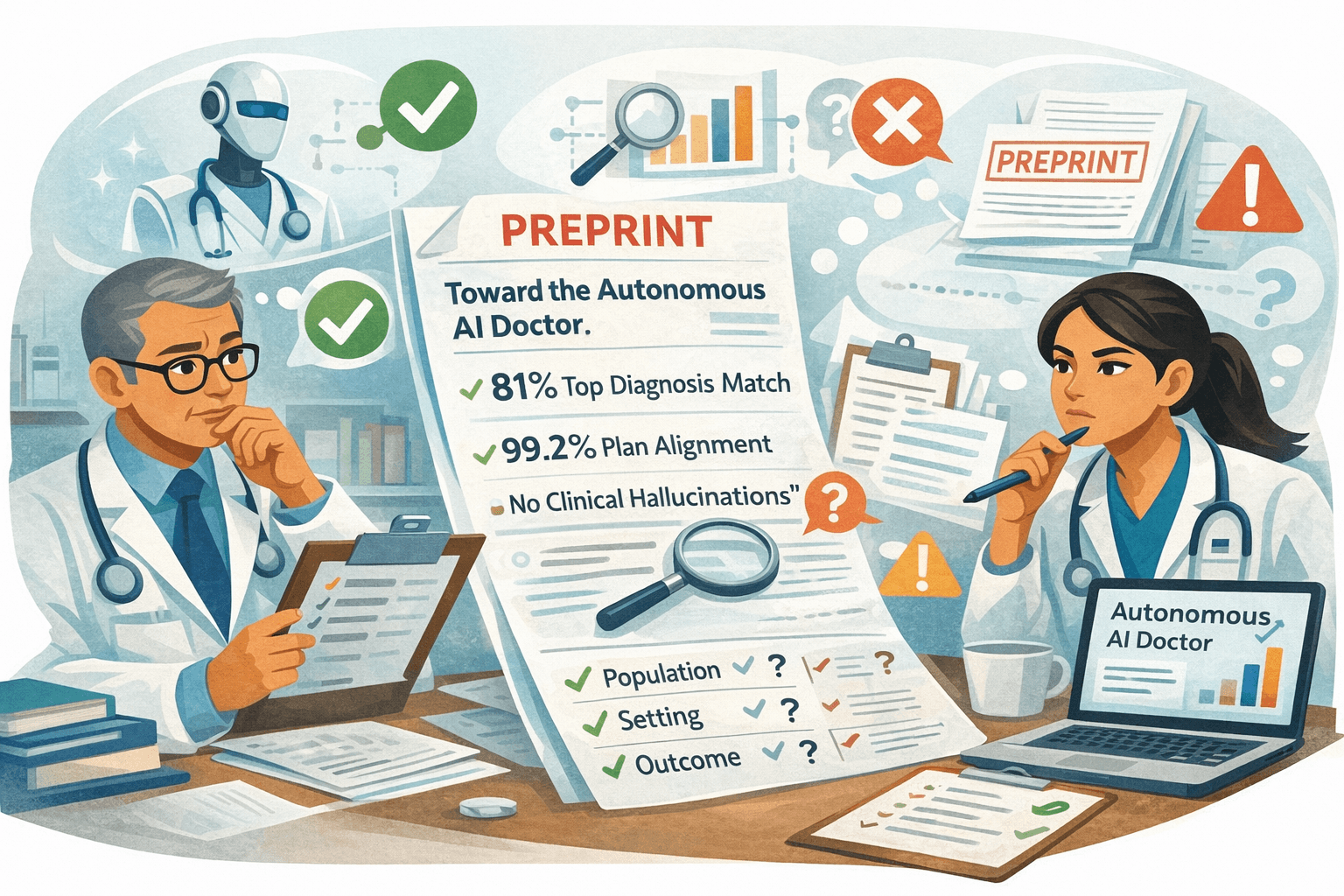

Doctronic has recently released a preprint, "Toward the Autonomous AI Doctor," claiming headline-grabbing metrics from 500 urgent-care encounters. If you haven't seen it yet, your patients soon will. Here is how to deconstruct the evidence like a pro.

What Doctronic claims: the numbers, then the context

The preprint reports on a retrospective study of 500 consecutive urgent-care telehealth cases. The headline metrics are bold:

- 81% Top Diagnosis Match: The AI's #1 diagnosis matched the board-certified clinician's #1 diagnosis in 81% of cases.

- 99.2% Plan Alignment: The AI's suggested management plan was judged "clinically compatible" with the human plan in nearly all cases.

- "No Clinical Hallucinations": The study reports zero instances of fabricated medical facts (though "hallucination" is defined narrowly in the paper as distinct from "omission" or "error").

Note: This data comes from a preprint, meaning it has not yet completed the full peer-review process typical of major medical journals.

What those metrics do (and don’t) tell you

To the untrained eye, 99.2% sounds like "case closed." To the critical appraiser, it raises four specific questions.

1) Diagnosis concordance ≠ diagnostic accuracy

"Concordance" simply means the AI agreed with the human clinician. It does not prove the diagnosis was correct.

- If the human clinician missed a subtle presentation of sepsis and the AI also missed it, they are "concordant."

- In AI evaluation, agreement with a fallible human is a proxy for correctness, not a guarantee of it: shared errors are scored as successes.
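To make the distinction concrete, here is a toy calculation. It is a sketch under loudly stated assumptions: the five cases are invented for illustration (they are not data from the Doctronic preprint), and it assumes an independent reference standard (for example, a diagnosis confirmed at follow-up) exists, which a concordance-only study does not provide. The helper names `concordance` and `accuracy` are hypothetical.

```python
# Hypothetical toy data for illustration only: NOT from the Doctronic preprint.
# Each case: (reference-standard diagnosis, clinician's #1 diagnosis, AI's #1 diagnosis)
cases = [
    ("viral URTI", "viral URTI", "viral URTI"),
    ("gastroenteritis", "gastroenteritis", "gastroenteritis"),
    ("sepsis", "viral URTI", "viral URTI"),  # both miss sepsis: concordant but wrong
    ("mechanical back pain", "mechanical back pain", "mechanical back pain"),
    ("pyelonephritis", "pyelonephritis", "UTI"),  # AI disagrees with the clinician
]


def concordance(cases):
    """Share of cases where the AI's #1 diagnosis matches the clinician's #1."""
    return sum(ai == clin for _, clin, ai in cases) / len(cases)


def accuracy(cases):
    """Share of cases where the AI's #1 diagnosis matches the reference standard."""
    return sum(ai == truth for truth, _, ai in cases) / len(cases)


print(f"Concordance with clinician:     {concordance(cases):.0%}")  # 80%
print(f"Accuracy vs reference standard: {accuracy(cases):.0%}")     # 60%
```

The missed sepsis case counts towards concordance but against accuracy. Concordance can only be read as accuracy if the clinician's diagnosis is itself verified.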

2) “Plan alignment” is easier to score than nuanced safety

In an urgent care setting, many conditions (viral URTI, gastroenteritis, non-specific back pain) converge on the same plan: reassurance + fluids + safety netting.

- An AI can get the diagnosis wrong (calling it "Viral Pharyngitis" instead of "Viral URTI") but still get the plan "right" (paracetamol). This inflates the alignment score without proving diagnostic precision.
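The inflation effect can be sketched with a second toy calculation. Everything here is hypothetical: the diagnosis-to-plan mapping (`PLAN_FOR`), the case pairs, and the assumption that plans are compared by exact match are illustrative choices, not the preprint's scoring method.

```python
# Hypothetical mapping for illustration only: several urgent-care diagnoses
# converge on the same conservative plan, as described above.
SUPPORTIVE = "reassurance + fluids + safety netting"
PLAN_FOR = {
    "viral URTI": SUPPORTIVE,
    "viral pharyngitis": SUPPORTIVE,
    "gastroenteritis": SUPPORTIVE,
    "pyelonephritis": "antibiotics + safety netting",
}

# (clinician's diagnosis, AI's diagnosis) for five hypothetical cases
pairs = [
    ("viral URTI", "viral pharyngitis"),       # label differs, plan identical
    ("viral URTI", "viral URTI"),
    ("gastroenteritis", "viral URTI"),         # different diagnosis, same plan
    ("gastroenteritis", "gastroenteritis"),
    ("pyelonephritis", "gastroenteritis"),     # different diagnosis, different plan
]

diagnosis_match = sum(clin == ai for clin, ai in pairs) / len(pairs)
plan_alignment = sum(PLAN_FOR[clin] == PLAN_FOR[ai] for clin, ai in pairs) / len(pairs)

print(f"Diagnosis match: {diagnosis_match:.0%}")  # 40%
print(f"Plan alignment:  {plan_alignment:.0%}")   # 80%
```

Because many low-acuity diagnoses share one plan, plan alignment can sit well above diagnosis match even when the AI names the wrong condition.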

3) Hidden variable: case mix

The study was conducted in telehealth urgent care.

- This population is self-selected for lower acuity. It under-represents the complex multimorbidity, frail elderly, and "undifferentiated collapse" cases that make up the bread and butter of UK General Practice.

- An AI that performs safely on "sore throats via video" is not necessarily safe for "abdominal pain in the elderly."

4) The adjudication method matters

Who decided the AI was right? The study used a "blinded LLM-based adjudication" process, verified by human experts.

- Using one LLM to mark another AI's homework is a growing trend, but it introduces circularity. If the judge model shares the same biases as the doctor model, it may rate errors as "correct."

The right checklist for any “clinically validated AI doctor”

When you see the next press release, use this checklist to assess the claim.

The "AI Doctor" Evidence Checklist

- Population: Was it 25-year-olds with iPhones (telehealth) or 85-year-olds with dementia (real world)?

- Setting: Was it urgent care (episodic) or chronic disease management (longitudinal)?

- Outcome: Did they measure patient outcomes (return visits, death) or just text agreement?

- Calibration: Does the AI know when to say "I don't know"?

- Guardrails: At what point does the system force a human handover?

UK translation: why this matters even if Doctronic is US-first

Doctronic is a US-centric product, but digital health has no borders.

- The "Spillover": UK patients are likely to use the free interface to triage themselves. Some will arrive at your surgery with a printout saying "The AI Doctor said I need antibiotics."

- The Shift: You are no longer the start of the diagnostic journey; you are the "second opinion" on the AI's primary assessment.

Where iatroX deliberately differs (and why that’s safer in UK practice)

At iatroX, we have made a deliberate choice not to build an "autonomous doctor."

- Brainstorm is a structured case walkthrough tool. It does not declare "The diagnosis is X." Instead, it asks: "Have you considered Y? What questions would rule it out?"

- Ask iatroX focuses on retrieval and verification. We don't want to replace your judgment; we want to fetch the NICE guideline that informs it.

We believe the safest model for 2026 is Clinician-in-the-Loop, not Clinician-out-of-the-Loop.

Practical clinician workflow: “AI for thinking, not deciding”

When a patient brings you an AI opinion, or when you use AI yourself, use this workflow:

- Broaden: Use AI to expand the differential ("What did I miss?").

- Discriminate: Use AI to generate the questions that tell the difference ("How do I distinguish A from B?").

- Verify: Check the decision-critical facts against a trusted source.

- Decide: Make the final call yourself.

- Document: Record your reasoning.

FAQ

Has Doctronic's study been peer-reviewed? The current data comes from a preprint, which means it has been published publicly but has not yet passed the rigorous peer-review process required by major medical journals.

What does “99.2% plan alignment” mean? It means that in 99.2% of the study cases, the AI's suggested treatment (e.g., "take ibuprofen") was judged to be clinically compatible with what the human doctor suggested. It does not necessarily mean the diagnosis was identical.

How should a UK clinician use these tools safely? Treat them as "Patient-Supplied Information." Acknowledge the AI's output, but perform your own independent history and examination. Use a clinician-facing tool like iatroX to verify any claims against UK guidance before prescribing.

Prefer a tool that helps you think rather than doing it for you? Try the Brainstorm 7-step method on iatroX.