Executive summary

The direction of travel for UK healthcare is clear. The Government’s 10-Year Health Plan sets out three fundamental shifts for the NHS: from hospital to community, from analogue to digital, and from sickness to prevention. At the heart of this transformation is the ambition to embed artificial intelligence into care pathways, with the NHS App serving as a unified "digital front door" for patients (GOV.UK).

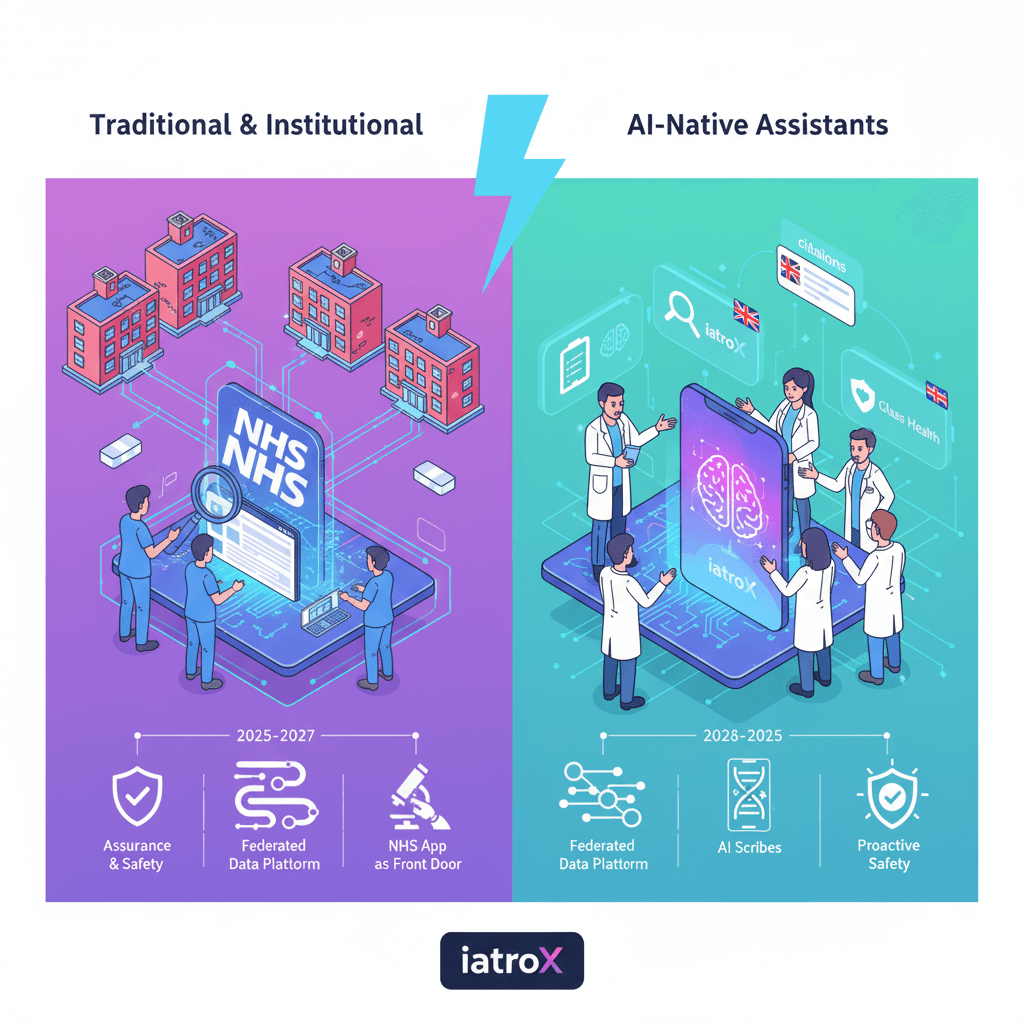

Over the next decade, this vision will materialise in stages. The near-term focus (2025–2027) is on rolling out proven technologies such as AI scribes under the new NHS England guidance, accelerating adoption of diagnostic AI through NICE Early Value Assessments (EVA), and testing novel AI as a Medical Device (AIaMD) in the supervised MHRA AI Airlock sandbox. Success will depend on a robust set of enablers: the Digital Technology Assessment Criteria (DTAC) and the DCB0129/0160 clinical safety standards for assurance, the Federated Data Platform (FDP) for infrastructure, and the AI Knowledge Repository for sharing adoption know-how.

Where we are today: baseline policies and intent

The journey began with the 2019 NHS Long Term Plan, which made "digitally-enabled care" a mainstream priority. This has now been folded into the more assertive 2025 10-Year Health Plan, which explicitly aims to make the NHS “the most AI-enabled care system in the world.” Foundational strategies like "Data Saves Lives" and the "Plan for Digital Health and Social Care" frame how data access, robust governance, and the NHS App will serve as the delivery vehicles for these new AI-powered services (GOV.UK).

What the next decade looks like (staged outlook)

2025–2027: “Prove value, reduce admin, set the guardrails”

- Ambient scribing at scale: Expect widespread, governed adoption of AI scribes under the 2025 NHS England guidance, with a strong focus on demonstrating time savings and adherence to the DTAC and DCB0129/0160 safety standards.

- Early Value Assessments for AI: The NICE EVA pathway will be the primary route for introducing new diagnostic AI into the NHS, allowing for permitted use with a clear requirement for ongoing evidence generation.

- Regulatory sandboxing: The MHRA AI Airlock will move beyond its initial pilots to become the standard for testing adaptive or novel AIaMD, helping to clarify evidence requirements and post-market surveillance plans before a wide rollout.

- "Virtual hospital" pilots: The first true "NHS Online" or virtual hospital services will begin, using AI-supported triage and remote consultation pathways integrated with the NHS App.

2028–2031: “Embed AI into pathways and operations”

- Unified patient-facing records: The NHS App will evolve into a single, AI-ready front door for services, with enhanced features for triage, patient choice, and secure messaging.

- Federated Data Platform (FDP) as routine infrastructure: The FDP will become the standard for operational analytics and research-grade evaluations, enabling pathway optimisation using trustworthy, de-identified data pipelines from across trusts.

- Workforce alignment: The NHS Long Term Workforce Plan will be in full effect, with an expanded focus on digital skills, clinical leadership in informatics, and AI literacy programmes such as the NHS Digital Academy and the Topol Digital Fellowships.

2032–2035: “Precision, prevention, and proactive safety”

- Preventive AI at population scale: The focus will shift to proactive care, with AI being used for population-level risk stratification, real-time safety surveillance, and interpreting newborn genomics data. Patient-facing AI guidance will be a standard feature in the NHS App.

- A mature assurance stack: The UK's assurance frameworks (DTAC, MHRA guidance informed by the Airlock pilots, and an established NICE evidence process) will enable the routine commissioning of proven and cost-effective AI.

The enablers that determine success

- Assurance & procurement: The DTAC is the mandatory baseline. The DCB0129/0160 standards provide the framework for clinical safety. The NHSE Digital Clinical Safety guidance sets expectations for Board-level accountability.

- Evaluation: The NICE EVA pathway allows adoption alongside evidence generation. The Translational Evaluation of Healthcare AI (TEHAI) framework helps assess capability and adoption, while HM Treasury's Magenta Book sets the standard for robust impact evaluation.

- Data infrastructure: The Federated Data Platform (FDP) provides the governed infrastructure for accessing data across trusts.

- Adoption know-how: The NHS AI Knowledge Repository is the national hub for case studies, "how-to" guides, and playbooks on topics like AI scribes.

The use-cases with the strongest near-term ROI

- Documentation & letters (ambient scribing): The most immediate and tangible benefit, returning time to clinicians.

- Imaging & oncology planning: AI contouring and triage tools, adopted under the NICE EVA pathway, can reduce clinical bottlenecks.

- Operational flow: Demand and capacity analytics, increasingly powered by the FDP.

- Patient support via the NHS App: Guided symptom navigation and access to trusted information via features like "My Companion."

Risks to plan for (and practical mitigations)

- Clinical safety & over-reliance: Mitigate by enforcing the DCB0129/0160 standards, which mandate a clinical safety case and hazard log. Always require a "human-in-the-loop" for final decisions.

- Evidence gaps: Mitigate by using the NICE EVA and MHRA AI Airlock pathways to stage adoption, with pre-defined outcomes and clear data collection requirements.

- Public trust & data use: Mitigate by being transparent. Communicate the purposes and safeguards of the FDP clearly and publish case studies of successful, safe AI use via the AI Knowledge Repository.

- Digital exclusion: Mitigate by building inclusive design into the NHS App roadmap, providing clear alternatives for those who cannot or do not wish to use digital tools, and meeting robust accessibility standards.

A pragmatic ICS/Trust roadmap (12–24 months)

- Phase 1 – Foundations (0–6 months): Map your current live AI use. Complete DTAC due diligence for any shortlisted tools. Formally stand up your DCB0129/0160 governance and nominate a Clinical Safety Officer.

- Phase 2 – Two focused pilots (6–12 months): Launch an administrative pilot (e.g., an AI scribe, following NHSE guidance) and a clinical pilot (e.g., an EVA-aligned imaging tool) with a clear evidence generation plan.

- Phase 3 – Scale with evidence (12–24 months): Publish the results of your pilots (positive or negative) to the AI Knowledge Repository. Begin to integrate successful tools with your NHS App patient flows and plan for FDP integration where relevant.
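
The three phases above can be treated as gates: a tool moves forward only once the earlier checks are complete. The following is a minimal sketch of that idea in Python, not an official NHS artefact. The class name, field names, and the grouping of checks per phase are all hypothetical, chosen only to mirror the bullets in this roadmap.

```python
from dataclasses import dataclass


@dataclass
class ToolReadiness:
    """Hypothetical readiness record for one shortlisted AI tool."""
    name: str
    dtac_complete: bool = False                  # DTAC due diligence done
    clinical_safety_officer_named: bool = False  # CSO nominated
    hazard_log_open: bool = False                # DCB0129/0160 hazard log started
    evidence_plan_agreed: bool = False           # evidence generation plan in place
    pilot_results_published: bool = False        # results shared, positive or negative


# Assumed mapping of roadmap phases to the checks required before entering them.
PILOT_CHECKS = [
    "dtac_complete",
    "clinical_safety_officer_named",
    "hazard_log_open",
    "evidence_plan_agreed",
]
PHASE_GATES = {
    "pilot": PILOT_CHECKS,
    "scale": PILOT_CHECKS + ["pilot_results_published"],
}


def missing_for(tool: ToolReadiness, phase: str) -> list[str]:
    """Return the checks still outstanding before `tool` can enter `phase`."""
    return [check for check in PHASE_GATES[phase] if not getattr(tool, check)]


scribe = ToolReadiness(
    "ambient scribe",
    dtac_complete=True,
    clinical_safety_officer_named=True,
)
print(missing_for(scribe, "pilot"))
```

A list of outstanding checks, rather than a single pass/fail flag, gives a digital or clinical safety lead something concrete to act on at each review point.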

Useful resources (bookmark these)

- 10-Year Health Plan (Overview) – DHSC/NHSE

- NHS App Roadmap & Features – NHS England (formerly NHS Digital)

- DTAC (Digital Technology Assessment Criteria) – NHS Transformation Directorate

- DCB0129/0160 & Digital Clinical Safety – NHS England

- MHRA AI Airlock – GOV.UK

- NICE Early Value Assessment (EVA) – NICE

- AI Knowledge Repository – NHS England Digital

- Federated Data Platform (FDP) – NHS England

FAQs

- What is the difference between large language models and large multimodal models?

- Large language models (LLMs) are trained primarily on text and work with text inputs and outputs. Large multimodal models (LMMs) can process and integrate several types of input, including text, images, and audio.

- Is artificial intelligence in healthcare “regulated” in the UK?

- Yes, through a multi-layered system. The DTAC provides a baseline for NHS procurement, the NICE Evidence Standards Framework (ESF) sets evidence standards, and the MHRA regulates any AI that functions as a medical device.

- Are ambient scribe tools allowed in the NHS?

- Yes, but only when deployed in line with specific NHS England guidance, which requires robust safeguards, a full clinical safety case, and diligent clinician oversight.

- Does retrieval-augmented generation (RAG) remove hallucinations?

- It significantly reduces them by grounding the model's answers in a retrieved set of source documents, but it does not eliminate them entirely. Human review of outputs remains essential.
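
To make the grounding step concrete, here is a minimal Python sketch of the retrieval half of RAG. It is a toy, assuming a hypothetical two-passage knowledge base and simple word-overlap scoring in place of the embedding search a real system would use. The passage text, identifiers, stopword list, and function names are illustrative only. It shows two things: the model is handed cited sources instead of answering from memory, and when nothing relevant is found the safest behaviour is to return nothing and escalate to a person.

```python
import re

# Hypothetical knowledge base; real deployments would index approved guidance.
PASSAGES = {
    "scribe-guidance": "Ambient scribe tools require a clinical safety case and clinician oversight.",
    "dtac": "DTAC is the baseline assessment for digital health technology procurement.",
}

# Small illustrative stopword list so common words do not count as matches.
STOPWORDS = {"a", "an", "the", "is", "and", "for", "of", "to", "do", "what"}


def tokens(text: str) -> set[str]:
    """Lowercase word set with stopwords removed."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def retrieve(question: str, passages: dict[str, str], min_overlap: int = 2):
    """Return (id, text) pairs sharing at least `min_overlap` words with the question."""
    q = tokens(question)
    scored = []
    for pid, text in passages.items():
        overlap = len(q & tokens(text))
        if overlap >= min_overlap:
            scored.append((overlap, pid, text))
    scored.sort(key=lambda item: (-item[0], item[1]))  # best match first
    return [(pid, text) for _, pid, text in scored]


def build_grounded_prompt(question: str, passages: dict[str, str]):
    """Build a prompt that restricts the model to retrieved, citable sources."""
    hits = retrieve(question, passages)
    if not hits:
        return None  # nothing to ground on: escalate to a human rather than guess
    context = "\n".join(f"[{pid}] {text}" for pid, text in hits)
    return (
        "Answer using only the sources below and cite their ids.\n"
        f"{context}\n"
        f"Question: {question}"
    )


print(build_grounded_prompt("Do ambient scribe tools need a clinical safety case?", PASSAGES))
```

Even with a prompt like this, a model can still misread or overstate a source, which is why the human review step stays in place.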